Introduction

Welcome to CMSI 3300: your portal into the awesome world of artificial intelligence!

Before we get started, some things to note about these course notes and the site you're currently viewing:

You can add notes inside the website so that you can follow along and type as I say stuff! Just hit SHIFT + N and then click on a paragraph to add an editable note area below it. NOTE: the notes you add will not persist if you close your browser, so make sure you save them to PDF when you're done taking notes (see below)! Having said that, know that a good deal of research suggests that handwritten notes are far more effective for memory retention than typed ones.

The site has been optimized for printing, including the notes you add (see above). I've added a print button to the bottom of the site, but really it just calls your browser's print functionality, which typically includes an option to export to PDF.

The course notes listed herein will have much of the lecture content, but not all of it; you are responsible for knowing the material you missed if you are absent from a lecture.

Any time you see a blue box with an information symbol, the tidbit inside is a definition, factoid, or just something I want to draw your attention to; typically these will help you with the conceptual portions of the homework assignments.

Any time you see an orange box with a cog symbol, the tidbit inside is a useful tool for your programming arsenal; typically these will help you with the concrete portions of your homework assignments.

Any time you see a red box with a warning symbol, the tidbit inside warns of a common pitfall; typically these will help you debug or avoid mistakes in your code.

Any time you see a teal box with a bullhorn symbol, the tidbit inside offers an intuition or analogy that I think makes some of the denser technical material easier to interpret.

Any time you see a yellow question box on the site, you can click on it...

...to reveal the answer! Well done!

Here's an example box that usually contains an activity to try on your own!

Syllabus Review

At this point in the lecture, we'll review the class syllabus, located here:

What is Artificial Intelligence?

Before we can answer what artificial intelligence is, let's start with a few thought-provoking questions... starting with an "easy" one:

Q1: What is "intelligence," and what qualities do we associate with it?

There are many, many definitions of intelligence, many of which vary wildly depending on who you ask... here are a couple of good ones:

Pei Wang says, "The essence of intelligence is the principle of adapting to the environment while working with insufficient knowledge and resources. Accordingly, an intelligent system should rely on finite processing capacity, work in real time, open to unexpected tasks, and learn from experience. This working definition interprets 'intelligence' as a form of 'relative rationality.'"

Legg and Hutter define intelligence as a measure of "an agent's ability to achieve goals in a wide range of environments" (generalizability).

There are too many to list here; take a look at this paper for a great reference.

Note, however, that many of the definitions associated with human intelligence are those that belong to cognition, the very human ability to *understand* through perception, thought, and experience.

Thus, if we're seeking to automate those processes...

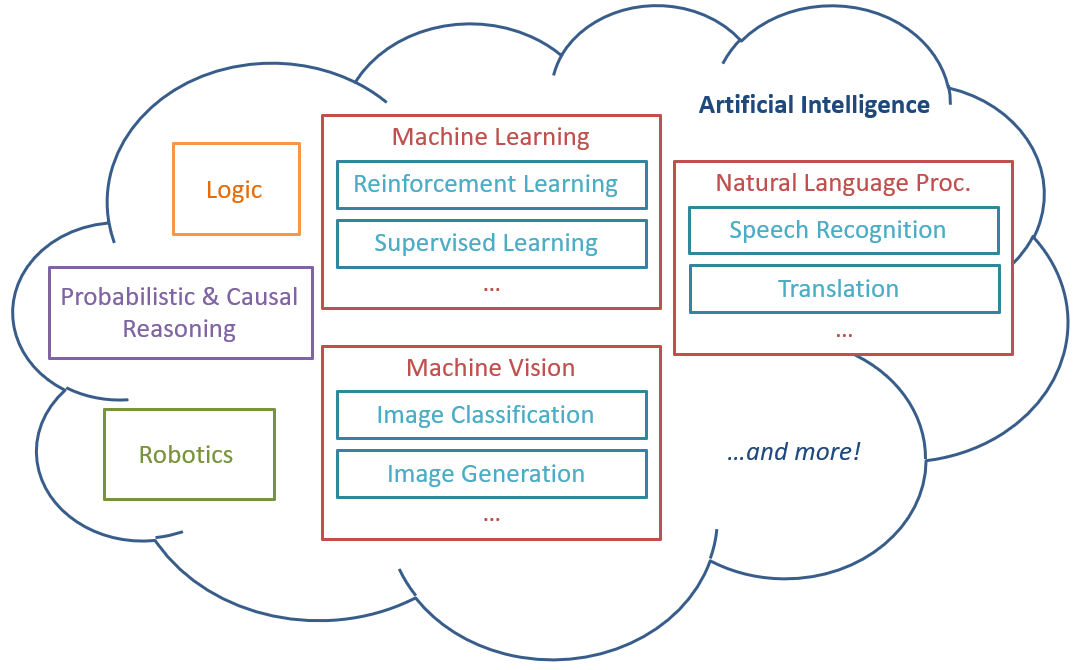

Artificial Intelligence is the umbrella term for the study and development of computer systems that can perform tasks normally reserved for human-level intellects.

Artificial General Intelligence (AGI) is the theoretical pinnacle of AI, in which a single agent can perform *any* task given to it at human level or beyond.

There is debate in both the AI and neuroscience communities as to whether "general" intelligence even exists in humans, and whether it can be replicated through a single model.

So, in the meantime, we in the AI community work from the ground up, developing modular Artificial Specific Intelligences (ASI) that can equal or surpass humans on specific tasks.

It turns out that many of these specific intelligences mirror facets of psychological study, so this class will also reference how much the two fields have in common.

So, what are some facets of human intelligence for which we have subfields of AI to study? Let's brainstorm!

Examples

For each of the following links and examples, identify and discuss the human-analogous facets of intelligence that might be required to achieve the agent's specific task:

Google's DeepMind learning to walk:

25 ChatGPT Agents Play a Game:

AI Mind-Reading Using Stable Diffusion:

Note how each of the above is an ASI -- you wouldn't expect, e.g., ChatGPT to drive you to the store, or DeepMind's walkers to write your code for you (*laughs nervously*)!

Types of Intelligence

The above examples are just the tip of the iceberg when it comes to the subfields of AI: there are many we've missed, and the ones we've seen deserve more detail.

Give some examples of specific *parts* of human intelligence, and we'll see how those map to subfields of AI!

Important Distinction: Artificial Intelligence (AI) and Machine Learning (ML) are not strictly the same thing, though the terms are often conflated; how they differ depends on the context:

Classifications of algorithms: AI is an umbrella term for approaches to tasks that approximate human abilities; ML is one data-driven approach to those tasks.

Describing applications: AI generally refers to autonomous decision-makers, and ML refers to mathematical models used to make predictions.

Analogy: AI : ML :: Understanding the "Why" Behind Data : Being a Good Mimic of the Data

Interested in this distinction? Read Pearl's "The Book of Why."

We'll explore this distinction more as we see aspects of both.

Here are some other details on subfields that you can investigate further.

| Subfield | Description | Example Problems |
|---|---|---|
| Reasoning and Knowledge Representation | How to formalize facts and representations about the environment, and then reason logically about them | Inference; common-sense logic; search; constraint satisfaction |
| Planning | How to set goals, then find and execute the necessary steps to achieve them | Robotics; game-playing agents |
| Natural Language Processing | How to autonomously extract meaning from human language | Sentiment analysis; sentence parsing; digital assistants; speech-to-text |
| Machine Vision | How to autonomously extract meaning from digital images and video | Robotic navigation; medical imaging; image classification |
| Data Science | How to interpret vast quantities of data and extract meaning that would escape the human eye | Policy formation; experimental design; econometrics and business sense |
| Probabilistic and Causal Reasoning | How to reason under partial beliefs, and about systems of cause and effect | Stochastic systems; partial observability; observations vs. interventions vs. counterfactuals |
| Learning | How to improve performance through experience or observed examples | Reinforcement learning (RL); deep learning |

Note: these are major individual areas of focus in AI, but they all overlap at some points, as we will see in the coming months.

Levels of Intelligence

Independent of the different types of specific intelligence, or how they combine to elicit general intelligence, we should ask a follow-up question about the different levels or complexities of intelligence, given that humans are supposedly at the top.

Q2: Are non-human animals intelligent? Are they *as* intelligent as humans?

The first part of this question might generate some controversy... the second part, not so much.

Generally, we can pick out pieces of intelligence that some animals share with humans, like learning from reinforcement, but we would still say that humans are, overall, more "intelligent," or possess more of the capabilities deemed intelligent, especially logic.

It seems, therefore, that there are different *levels* of intelligence that we can characterize and then, as in this class, hope to automate.

The Pearlian Causal Hierarchy (PCH) is a stratification of the different levels of information, data, and queries that any agent (artificial or otherwise) can employ, with each layer / tier subsuming the one before it.

| Layer/Tier | Name | Description | Examples |
|---|---|---|---|
| \(\mathcal{L}_1\) | Associational | Animalistic intelligence in which correlations are drawn between variables. Often used for prediction and imitation tasks. Most of Machine Learning lives here! | |
| \(\mathcal{L}_2\) | Interventional | Causal understanding of what actions lead to what outcomes, like a toddler learning to interact with its environment, or a scientist conducting an experiment. Most of Reinforcement Learning lives here! | |
| \(\mathcal{L}_3\) | Counterfactual | Human-level understanding of systems of cause and effect: the ability to make assertions about events that are contrary to what happened in reality, thus decoupling cognition from data. | |
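To make the gap between the first two tiers concrete, here is a small simulation, a sketch under made-up assumptions: a hidden common cause Z (think "hot weather") drives both X and Y, and X has no effect on Y at all. The probabilities and the function names (`sample_unit`, `associational_gap`, `interventional_gap`) are hypothetical, chosen only for illustration. An \(\mathcal{L}_1\) query that merely watches the data compares P(Y=1 | X=1) with P(Y=1 | X=0); an \(\mathcal{L}_2\) query sets X itself, as an experimenter would, and compares P(Y=1 | do(X=1)) with P(Y=1 | do(X=0)).

```python
import random

def sample_unit(rng, do_x=None):
    """Draw one (x, y) pair from a toy model with edges Z -> X and Z -> Y only.

    If do_x is given, X is forced to that value (an intervention),
    which cuts the Z -> X edge. All probabilities are made up.
    """
    z = rng.random() < 0.5                      # hidden common cause
    if do_x is None:
        x = rng.random() < (0.8 if z else 0.2)  # observationally, X listens to Z
    else:
        x = do_x                                # intervention: X set from outside
    y = rng.random() < (0.7 if z else 0.1)      # Y depends only on Z, never on X
    return x, y

def associational_gap(n=100_000, seed=0):
    """L1 query: P(Y=1 | X=1) - P(Y=1 | X=0), estimated from passive observation."""
    rng = random.Random(seed)
    counts = {True: [0, 0], False: [0, 0]}      # x -> [number with y=1, total]
    for _ in range(n):
        x, y = sample_unit(rng)
        counts[x][0] += y
        counts[x][1] += 1
    p1 = counts[True][0] / counts[True][1]
    p0 = counts[False][0] / counts[False][1]
    return p1 - p0

def interventional_gap(n=100_000, seed=0):
    """L2 query: P(Y=1 | do(X=1)) - P(Y=1 | do(X=0)), estimated by forcing X."""
    rng = random.Random(seed)
    p1 = sum(sample_unit(rng, do_x=True)[1] for _ in range(n)) / n
    p0 = sum(sample_unit(rng, do_x=False)[1] for _ in range(n)) / n
    return p1 - p0
```

With these numbers, the \(\mathcal{L}_1\) gap should come out near 0.36 (analytically, 0.58 - 0.22) even though X does nothing to Y, while the \(\mathcal{L}_2\) gap should hover near 0. A purely associational learner trained on the observational data would happily "predict" Y from X; only the intervention reveals that changing X accomplishes nothing.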

Note how the vast majority of our advances in machine learning are only in the first layer -- we have created *really* good imitators, but they are typically only as good as the data that support them and the constraints on their environment, and they certainly have no human-level "understanding."

In CMSI 3300, we'll see examples largely in the first 2 tiers of the hierarchy; in CMSI 4320, Cognitive Systems Design, we'll climb the whole ladder!

The PCH is named after Judea Pearl (my graduate advisor and Turing Award winner </flex>), whose work on understanding causality has spanned many disciplines, like the empirical sciences and econometrics, and has begun to take root in the AI sciences as well.

Worth mentioning: there are cognitive analogs for the PCH in humans; those who have read Kahneman's "Thinking, Fast and Slow" know that humans have 2 modes of processing:

System 1: fast, effortless, reactionary decisions like driving a familiar route -- evolutionarily came first as we crawled out of the muck as a species.

System 2: slow, effortful, cognitive decisions like solving a math problem or planning -- evolutionarily later and more advanced, uniquely human.

Connection: we as humans have parts of our cognitive capabilities that are dumb and associational, and others that aren't, and we're able to harness both -- keep that context in mind as we're looking at individual methods in ASI design!

Challenges

Getting any of the above to work is much easier said than done, of course!

As it turns out, designing artificial intelligences is no walk in the park, and we have yet to come anywhere near the cohesive level of intelligence that humans achieve in their lifetimes.

Some of the many challenges that arise in arbitrary AI tasks include:

How do we model an automated system after a human one when we don't fully understand neuroscience?

How do we represent knowledge?

How do we model an agent's environment and its perception of it?

How do we establish goals, motivation, and self-reflection, among the many other "fuzzy" human traits?

Plainly, these are high-level abstractions for much of what must be considered in any arbitrary AI application, which will vary wildly in complexity and approach.

Food for thought: is the human intellect a composite of many separate ASIs, or one "golden recipe" for intelligence that we have yet to figure out?

These questions, and more, will be yours to solve (well, not in this class, but you'll have a good start)!

About This Class

So, with all of these exciting topics above, we should take a moment for a meta-analysis of just what this class is and what it is not, because AI courses vary from university to university.

What This Class IS

Breadth: This class will be a survey of many of the above disciplines, as well as the theories, mathematics, and algorithms that address many of their common problems and beyond.

More specialized AI courses will give proper treatments to some of the more specialized areas of research.

History: The role of this course will be to provide both a historical and practical perspective for many of the different subfields of AI, the problems they address, the theories they entail, and the work they have yet to do.

It's important to understand not just where we are in the field, but how we got here. This will give you a deeper understanding of how we've acted as toolmakers, scientists, and explorers, giving you direction to advance the frontier in a similar fashion.

Practice Meets Theory: This class prepares you not just as practitioners of AI and ML, but as theorists; you will understand the mathematics and motivations behind the tools, craft some of them by hand, and also learn to use popular toolkits that address a wide swath of real-world problems.

What This Class IS NOT

Not *Just* Machine Learning / Data Science: Artificial Intelligence has come to be a label associated more with agents that make decisions, and Machine Learning more with how those agents' policies can be acquired or how humans gain insight from data.

Of course, we as humans have both of these capabilities, so plainly the two are intertwined.

This course looks at BOTH AI as a holistic discipline and Machine Learning as an important subfield of it.

There are other courses at LMU that are devoted only to Machine Learning and Data Science, for which this class will equip you well.

Not Just Deep Learning: This class is *not* a focus on deep learning and its applications (though LMU does offer courses in this).

Deep Learning has made a meteoric rise in both AI culture and the news, but warrants only a couple of weeks in our class; why?

There are many prerequisite techniques and mathematics to understand before you'll be able to fully appreciate deep learning.

Deep learning is a sledgehammer solution to many interesting problems: just because you *can* apply it, doesn't mean there isn't a simpler, less cumbersome solution to a task.

Deep learning is laden with additional requirements: most approaches require huge datasets or feature sets and large amounts of processing, and can involve complex decompositions of learning environments to operate properly -- such requirements are not always feasible to meet.

Deep learning is weak on interpretability: when something goes wrong, it's extremely difficult to say what... and when something goes right, it's sometimes difficult to say why!

As such, although deep learning is a valuable tool in the AI practitioner's arsenal, it is not the sole focus of this class, and may be yet another of the many stepping stones that our discipline takes on the road to truly understanding (and then implementing!) intelligence.

Little Philosophy: This class will *not* prominently feature philosophical perspectives on AI. This is a technical computing course.

We may touch on the myriad fascinating philosophical problems surrounding AI, but only briefly or in passing; still, great discussion can come of it if you want to think / talk about these topics outside of class as well!

This layer of discussion is better accomplished once you've learned some of the history and current tools in AI, and will feature prominently in the follow-on course, Cognitive Systems Design.

Lastly, just to temper expectations a tiny bit...

We've gotta start slow! We're not going to be inventing ChatGPT in this class.

That said, we *will* see the underlying technology and the fundamental operations that govern how DALL-E works!

So... with those caveats in place... let's take our first steps!

Artificial Agents

For much of the first part of this course, we will investigate the theory and algorithms used in the design of artificial agents, so we should take a moment to discuss some definitions.

What does it mean to have agency?

The ability to make decisions and act autonomously in one's environment.

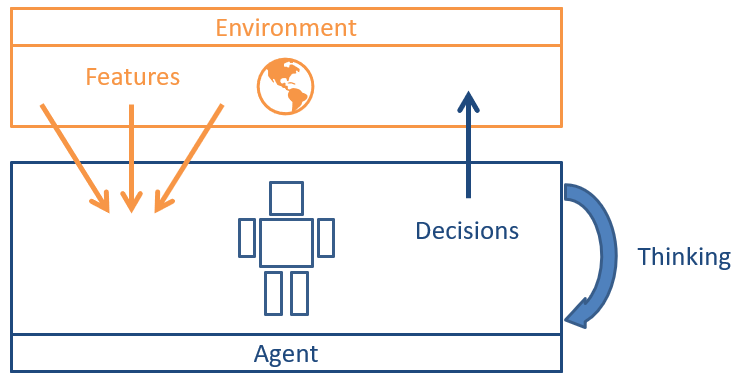

An artificial agent is therefore one that we, as programmers, imbue with agency, mapping its observed environment (e.g., as perceived through sensors) to some behavior.
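As a minimal sketch of that percept-to-behavior mapping, the block below borrows the classic two-room "vacuum world" toy problem from AI textbooks (it is not an example from these notes). The names `reflex_vacuum_agent` and `run`, the room labels, and the action strings are all hypothetical. The agent program is just a function from a percept (the agent's location and whether that spot is dirty) to an action, and a small loop plays the role of the environment.

```python
from typing import Callable, Tuple

# A percept is what the agent's "sensors" report: (location, is_dirty).
Percept = Tuple[str, bool]
# An agent program is simply a function from percepts to actions.
AgentProgram = Callable[[Percept], str]

def reflex_vacuum_agent(percept: Percept) -> str:
    """Map the current percept directly to an action (no memory, no planning)."""
    location, is_dirty = percept
    if is_dirty:
        return "Suck"
    return "Right" if location == "A" else "Left"

def run(agent: AgentProgram, dirt: dict, start: str, steps: int) -> list:
    """Tiny two-room environment loop: sense, let the agent decide, apply the action."""
    location = start
    history = []
    for _ in range(steps):
        percept = (location, dirt[location])   # what the sensors see right now
        action = agent(percept)
        history.append(action)
        if action == "Suck":
            dirt[location] = False             # cleaning changes the environment
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return history
```

For example, starting in room A with both rooms dirty, `run(reflex_vacuum_agent, {"A": True, "B": True}, "A", 4)` should return `["Suck", "Right", "Suck", "Left"]`. Because this agent keeps no memory, it goes on shuttling between rooms even after both are clean; giving it internal state is one way to address that.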

Just how we define the agent's capacities and model its environment will be a major focus throughout the course, and will differ depending upon the problems we're addressing.

So what, precisely, constitutes an agent's reasoning environment?

An artificial agent's reasoning environment consists of all objects (and possibly other agents) that can influence the agent's decisions.

The parts of the environment that an agent perceives / attends to are generally called features.

Suppose we are designing an artificial agent to play Pacman, as in the traditional game pictured below:

What kinds of features do you think would be important for an AI-controlled Pacman in its traditional environment?

The locations of pellets, power pellets, fruits, ghosts, etc., as these guide its goals and actions (movements around the grid)

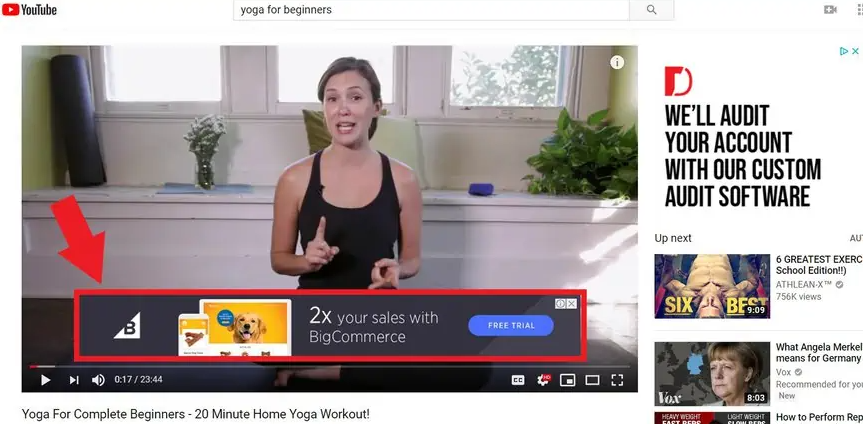
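To make "features" concrete, here is a sketch of turning a raw Pacman board into a handful of numbers an agent might attend to. The text encoding of the board (`%` walls, `.` pellets, `o` power pellets, `G` ghosts, `P` Pacman), the tiny layout, and the feature names are all assumptions made for illustration, not a course API. Distances are maze distances found by breadth-first search, so walls are respected rather than measured straight through.

```python
from collections import deque

# Hypothetical text encoding of a Pacman board (an assumption for illustration):
# '%' wall, '.' pellet, 'o' power pellet, 'G' ghost, 'P' Pacman, ' ' empty.
LAYOUT = [
    "%%%%%%%",
    "%P . G%",
    "% %%% %",
    "%o   .%",
    "%%%%%%%",
]

def find(layout, symbol):
    """Return every (row, col) where the given symbol appears."""
    return [(r, c) for r, row in enumerate(layout)
                   for c, ch in enumerate(row) if ch == symbol]

def maze_distances(layout, start):
    """Breadth-first search from start; returns steps to each reachable non-wall cell."""
    dist = {start: 0}
    frontier = deque([start])
    while frontier:
        r, c = frontier.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            # The board is bordered by walls, so neighbors never leave the grid.
            if layout[nxt[0]][nxt[1]] != "%" and nxt not in dist:
                dist[nxt] = dist[(r, c)] + 1
                frontier.append(nxt)
    return dist

def extract_features(layout):
    """Summarize the raw board into a few numbers an agent might attend to."""
    pacman = find(layout, "P")[0]
    dist = maze_distances(layout, pacman)

    def nearest(symbol):
        ds = [dist[p] for p in find(layout, symbol) if p in dist]
        return min(ds) if ds else None

    return {
        "pellets_left": len(find(layout, ".")),
        "nearest_pellet": nearest("."),
        "nearest_power_pellet": nearest("o"),
        "nearest_ghost": nearest("G"),
    }
```

On this toy layout, `extract_features(LAYOUT)` should report 2 pellets left, the nearest pellet and the power pellet each 2 steps away, and the nearest ghost 4 steps away. The agent then reasons over this small dictionary instead of the raw grid, which is exactly the point of choosing features.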

Consider a different agent whose job it is to select advertisements that it thinks viewers will like based on their user-profiles -- what would the environment, features, and actions look like here?

What a challenge the above user must have been; a business-minded yogi?

As such, for each problem we address in this course, we will make assumptions and restrictions about the environment, and then craft an agent that addresses those modeling choices.

Let's get started with one of the earliest applications of AI -- Logic!