Deadlock

Last class, we demonstrated the folly of attempting to solve the critical section problem in user-mode, and discovered that we not only made ourselves susceptible to race conditions on our "home made" lock, but also encountered the curious case where all cooperative threads appeared to be frozen.

This is, in the synchronization lingo, an instance of deadlock, just like you might encounter on the 405.

Deadlock can occur in cooperative process synchronization wherein two or more processes are mutually waiting on shared resources.

Whereas semaphores can prevent race conditions, note that deadlock can still arise from their proper usage: a thread that needs several locks can be preempted after acquiring some but not all of them, leaving cooperating threads each holding a lock that the other needs!

Knock one bug down and find another amirite?

Let's look at a motivating example, then how to formalize and address deadlock.

Motivating Example

Suppose we have two threads attempting to acquire locks on two system resources (e.g., 2 CD drives that both threads wish to copy from one onto the other).

Below, we avoid race conditions by using pthread mutex locks (which behave much like binary semaphores managed through the pthread library), but run into a different problem.

Run this program a few times and see what happens -- can you deduce where the deadlock can occur? Trace the preemption that causes it.

#include <pthread.h>

#include <unistd.h>

#include <stdio.h>

void* t1f(void* arg);

void* t2f(void* arg);

static pthread_mutex_t m1, m2;

int main(int argc, char* argv[]) {

    pthread_t t1, t2;

    pthread_attr_t attr;

    pthread_attr_init(&attr);

    pthread_mutex_init(&m1, NULL);

    pthread_mutex_init(&m2, NULL);

    printf("[!] Spawning threads!\n");

    pthread_create(&t1, &attr, t1f, NULL);

    pthread_create(&t2, &attr, t2f, NULL);

    /* If the threads deadlock, these joins never return */

    pthread_join(t1, NULL);

    pthread_join(t2, NULL);

    printf("[!] All finished!\n");

    return 0;

}

/* Thread 1 acquires m1 first, then m2 */

void* t1f(void* arg) {

    pthread_mutex_lock(&m1);

    pthread_mutex_lock(&m2);

    printf("[1] Thread 1 CS Reached\n");

    /* Leaving the critical section: release both locks */

    pthread_mutex_unlock(&m2);

    pthread_mutex_unlock(&m1);

    return NULL;

}

/* Thread 2 acquires the same locks in the opposite order: m2, then m1 */

void* t2f(void* arg) {

    pthread_mutex_lock(&m2);

    pthread_mutex_lock(&m1);

    printf("[2] Thread 2 CS Reached\n");

    pthread_mutex_unlock(&m1);

    pthread_mutex_unlock(&m2);

    return NULL;

}

Deadlock Characterization

Naturally, we should like to make determinations for when deadlock can happen -- identification is the first step to prevention!

This task begins with some formalizations:

In the system resource model, a system is viewed as a collection of finite resource types (like files, drives, and printers), that processes must (1) request, (2) use, and then (3) release.

If a system resource is unavailable upon request, a process must wait for it to be released -- and thus, deadlock becomes possible.

Interestingly, a deadlock has the potential to occur only if four specific conditions hold simultaneously:

Mutual Exclusion: only one process may utilize a resource at any given time.

Hold and Wait: a process holding at least one resource is waiting to acquire other resources currently held by another process.

No Preemption: only the process holding a resource can release it (i.e., it must voluntarily relinquish it).

Circular Wait: a set of processes \(P_0, P_1, \ldots, P_n\) are each waiting for a resource held by the next process in the sequence, and \(P_n\) is waiting for one held by \(P_0\).

With this list of necessary conditions, we can take one of three stances on deadlock from an OS-design perspective:

Prevention requires that we guarantee at least one of the above four conditions can never hold, which is typically over-restrictive from an OS's perspective and would require that we remove options from the user space.

Avoidance requires a priori knowledge about a process' required resources, but then attempts to devise a plan for sharing resources that avoids deadlock. This is typically impractical but can be implemented in the application space when necessary.

Ignore it since, in general, deadlock happens quite rarely, and if it does, we will conclude that it was the programmer's fault and carry on with our day.

Which stance do you think that modern OSes take toward deadlock?

Believe it or not, they take option #3 -- ignore it! The onus of responsibility for avoiding deadlock therefore falls on the shoulders of the application programmer (i.e., in user space).

That said, in scenarios (like the above) wherein we really need to prevent deadlock, we need the tools to both detect it as a possibility, and then hopefully avoid it at the programmatic level.
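As a taste of what a programmatic fix can look like, here is a minimal sketch that removes the Circular Wait condition from the two-mutex example by having every thread acquire the locks in one agreed-upon order. The names `copy_worker`, `copies_done`, and `run_ordered_demo` are made up for this illustration, and lock ordering is just one common convention, not the only remedy.

```c
#include <pthread.h>

static pthread_mutex_t m1 = PTHREAD_MUTEX_INITIALIZER;
static pthread_mutex_t m2 = PTHREAD_MUTEX_INITIALIZER;

/* Hypothetical shared state, touched only while both locks are held */
static int copies_done = 0;

/* Both threads run this same routine, so both take m1 before m2.
   With a single global order, no thread can hold m2 while waiting
   on m1, and a circular wait cannot form. */
static void* copy_worker(void* arg) {
    (void)arg;
    for (int i = 0; i < 1000; i++) {
        pthread_mutex_lock(&m1);
        pthread_mutex_lock(&m2);
        copies_done++;                /* critical section */
        pthread_mutex_unlock(&m2);    /* release in reverse order */
        pthread_mutex_unlock(&m1);
    }
    return NULL;
}

/* Hypothetical helper: runs two workers and returns the final count */
int run_ordered_demo(void) {
    pthread_t t1, t2;
    copies_done = 0;
    pthread_create(&t1, NULL, copy_worker, NULL);
    pthread_create(&t2, NULL, copy_worker, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    return copies_done;
}
```

Calling `run_ordered_demo()` from a `main` (compiled with `-pthread`) should always return rather than hang, since Hold and Wait still occurs but the wait can never become circular.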

Deadlock Avoidance

To avoid deadlock at the programmatic level, we must first detect when it is possible and then avoid it when detected.

Let us start with the detection component and then see how we might move from there.

Deadlock Detection: Resource Allocation Graphs

A resource allocation state can be represented graphically, depicting which processes are requesting, and which currently possess, different system resources.

The graphical depiction of a resource allocation state has the following interpretation:

We can use such a Resource Allocation Graph (RAG) to detect deadlock by seeing if it is possible to enter into a circular wait.

Using a RAG, a safe state is one in which, for processes \(\{P_0, P_1, \ldots, P_n\}\), there exists a sequence in which the requests of each process can be honored, such that the requests of each process \(P_i\) can be satisfied by the currently available resources plus those released by all processes earlier in the sequence.

This sequence assumes that when a process has used its requested resources, it has also properly released them.

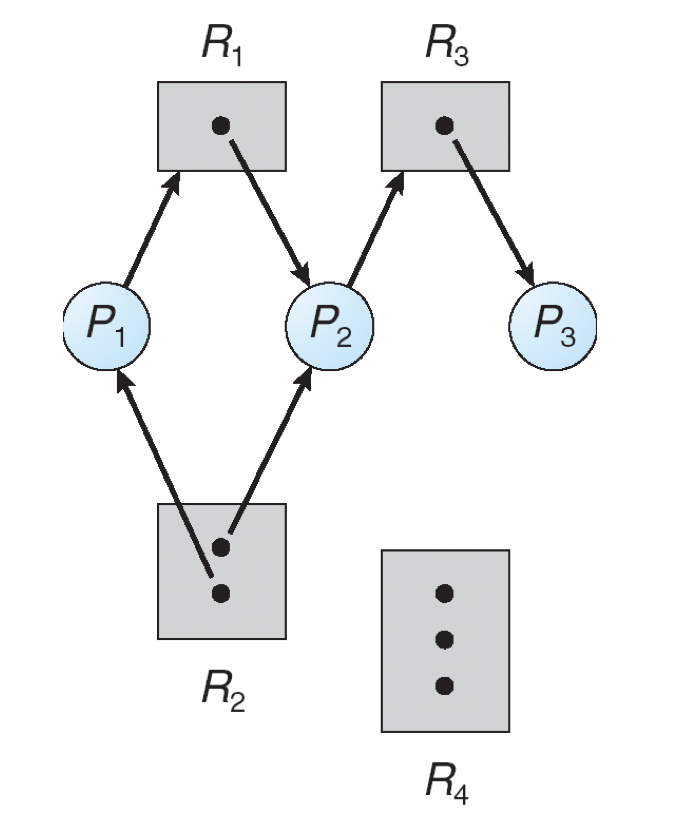

Here is an example RAG that is in a safe state; what is the order in which processes may have their requests granted to maintain the safe state?

Is the following RAG in a safe state? Why or why not?

This is a case of deadlock because there is no sequence by which the processes can have their requests honored that does not result in a circular wait.

As such, we might be tempted to conclude that in the presence of a cycle, there is always deadlock, but consider the following:

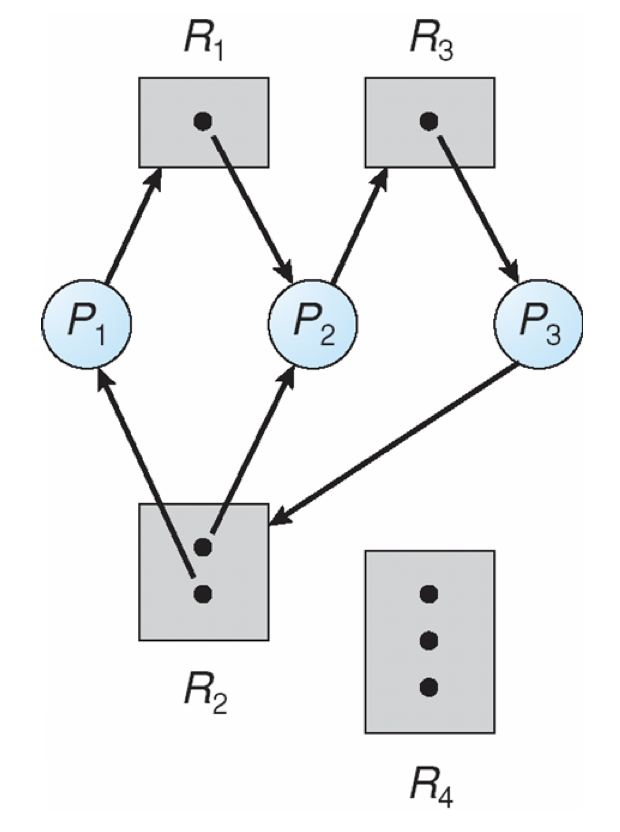

Is the following RAG in a safe state? Why or why not?

Yes, this is a safe state because we can honor resource requests in the order: \(P_2, P_4, P_3, P_1\).

As such, we can make the following conclusion:

A deadlock cannot happen in the absence of a RAG cycle, but a cycle need not imply a deadlock.
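The cycle check itself is easy to sketch. Below is one possible way to do it, using a depth-first search over an adjacency matrix; the `Rag` struct, the `MAX_NODES` bound, and the choice to number processes and resources in a single index space are all assumptions made for this illustration.

```c
#include <stdbool.h>

#define MAX_NODES 16

/* edge[u][v] is true when the graph has a directed edge u -> v.
   Processes and resources share one index space in this sketch:
   request edges go process -> resource, and assignment edges go
   resource -> process. */
typedef struct {
    int n;
    bool edge[MAX_NODES][MAX_NODES];
} Rag;

enum { UNSEEN, ON_PATH, DONE };

static bool dfs_finds_cycle(const Rag* g, int u, int state[]) {
    state[u] = ON_PATH;
    for (int v = 0; v < g->n; v++) {
        if (!g->edge[u][v]) continue;
        /* An edge back to a node on the current path closes a cycle */
        if (state[v] == ON_PATH) return true;
        if (state[v] == UNSEEN && dfs_finds_cycle(g, v, state)) return true;
    }
    state[u] = DONE;
    return false;
}

/* Returns true if any directed cycle exists in the graph */
bool rag_has_cycle(const Rag* g) {
    int state[MAX_NODES] = { UNSEEN };  /* UNSEEN is 0, so all start unseen */
    for (int u = 0; u < g->n; u++)
        if (state[u] == UNSEEN && dfs_finds_cycle(g, u, state)) return true;
    return false;
}
```

Per the conclusion above, a `false` result rules deadlock out, while a `true` result only flags it as a possibility; further analysis is needed when resources have multiple instances.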

So, supposing we had such a RAG, how do we use it to avoid deadlock?

RAG Algorithm

Note that in the general formulation of a RAG, we allow resource nodes to specify that there are multiple instances of that particular resource (e.g., 2 CD drives), but as we shall soon see, it is sometimes useful to represent resources separately.

Under this restriction, RAGs can serve as predictive mechanisms for when deadlock is about to occur, and avoid entering an unsafe state.

The resource allocation graph algorithm is used for deadlock avoidance in cases where:

Resources required by a process can be claimed prior to execution.

There exists only one instance of each resource type.

In this scheme, each process indicates, a priori, every resource it may request during execution via a claim edge, represented as a dashed arrow pointing from process to resource: \(P_i \rightarrow R_j\).

The RAG algorithm thus proceeds as follows:

[Request] A claim edge is converted to a request edge once a process requests one of the resources it indicated that it may (via a claim edge).

[Allocation] The request edge is converted to an assignment edge whenever the resource is allocated to the process.

[Release] Once the process is done using the allocated resource, the assignment edge is returned to a claim edge.

The purpose of this algorithm is, of course, to avoid deadlock entirely, so we would be naive to believe that we should just grant every resource request that comes our way.

Using the formulation for RAGs given above, when should we not allocate a resource to a process requesting it?

Whenever honoring that request (i.e., converting the request edge into an assignment edge) would introduce a cycle into the graph!

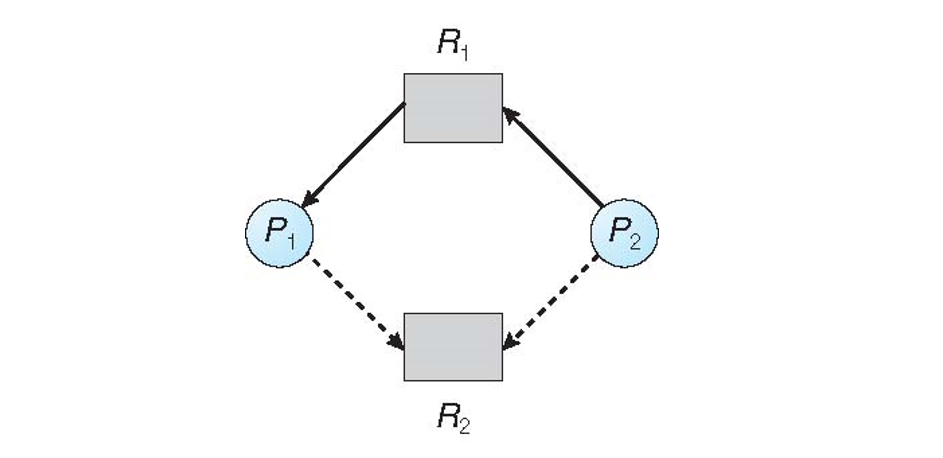

Take the following simple example:

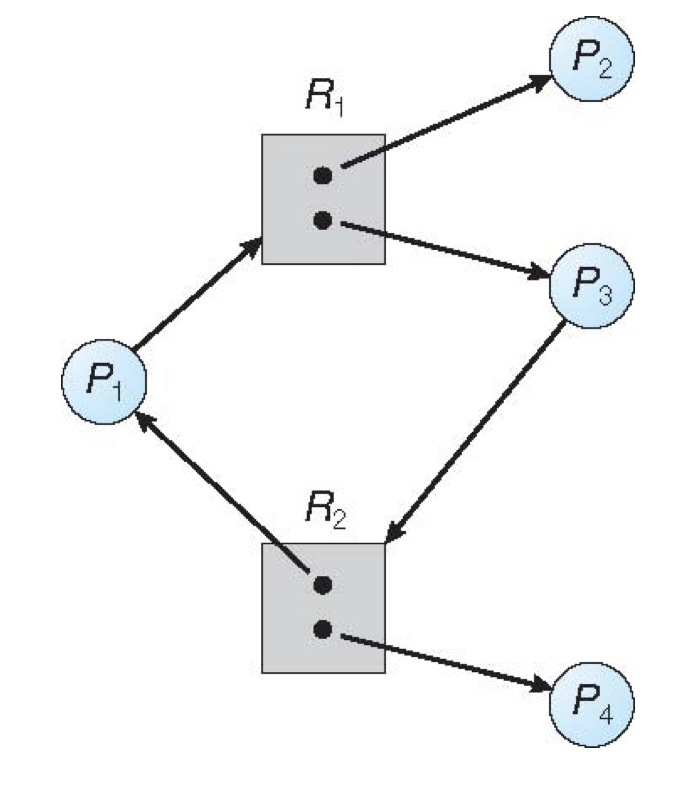

In the above:

Two processes, \(P_1, P_2\) are attempting to cooperatively share two resources \(R_1, R_2\).

\(P_1\) has been allocated \(R_1\), \(P_2\) has requested \(R_1\), and both \(P_1, P_2\) may, at some time in the future, request \(R_2\) (via the dashed claim edges).

Now that we have the state of the RAG indicated above, let's consider:

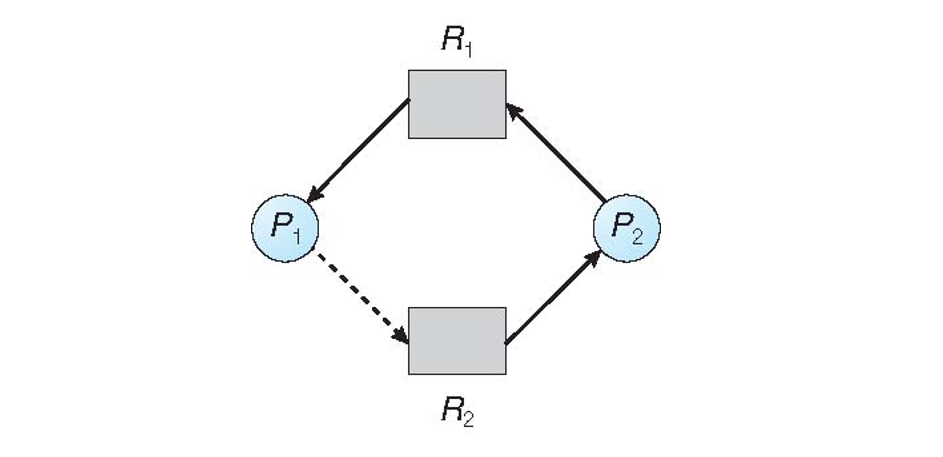

Suppose \(P_2\) requests and is allocated \(R_2\), thus converting the claim edge \(P_2 \dashrightarrow R_2\) into an assignment edge \(R_2 \rightarrow P_2\) (as shown below); should we honor this request?

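No! Doing so would create the cycle \(P_2 \rightarrow R_1 \rightarrow P_1 \dashrightarrow R_2 \rightarrow P_2\), which is an unsafe state: if \(P_1\) later requests \(R_2\), each process will be waiting on a resource held by the other.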

Accordingly, we now have a means of using a RAG to avoid deadlock:

The RAG Algorithm will only allocate a resource to a process if doing so does not introduce a cycle into the RAG (since this places us in an unsafe state in which deadlock may occur).
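To make the rule concrete, here is a rough sketch of it for the two-process, two-resource example, assuming a single instance of each resource. The `Rag` struct, the `Edge` enum, and the functions `try_grant` and `rag_release` are invented for this illustration and are not from any particular OS or library.

```c
#include <stdbool.h>

#define NP 2   /* processes P1, P2 -> indices 0, 1 */
#define NR 2   /* resources R1, R2 -> indices 0, 1 */

typedef enum { NO_EDGE, CLAIM, REQUEST, ASSIGNED } Edge;

/* edge[p][r] relates process p to resource r. CLAIM and REQUEST
   point p -> r; ASSIGNED points r -> p. */
typedef struct { Edge edge[NP][NR]; } Rag;

/* Can we walk from process `from` to process `target`, going
   process -> resource on claim/request edges and
   resource -> holder on assignment edges? */
static bool reaches(const Rag* g, int from, int target, bool seen[NP]) {
    if (from == target) return true;
    seen[from] = true;
    for (int r = 0; r < NR; r++) {
        if (g->edge[from][r] != CLAIM && g->edge[from][r] != REQUEST) continue;
        for (int q = 0; q < NP; q++)
            if (g->edge[q][r] == ASSIGNED && !seen[q] &&
                reaches(g, q, target, seen))
                return true;
    }
    return false;
}

/* Returns true and records the assignment only if r is free and
   granting it to p keeps the graph acyclic; otherwise p is left
   waiting on a request edge. */
bool try_grant(Rag* g, int p, int r) {
    if (g->edge[p][r] != CLAIM && g->edge[p][r] != REQUEST) return false;
    g->edge[p][r] = REQUEST;                      /* [Request] */
    for (int q = 0; q < NP; q++)
        if (g->edge[q][r] == ASSIGNED) return false;  /* r is busy */
    g->edge[p][r] = ASSIGNED;                     /* tentative [Allocation] */
    for (int q = 0; q < NP; q++) {
        if (q == p) continue;
        if (g->edge[q][r] != CLAIM && g->edge[q][r] != REQUEST) continue;
        bool seen[NP] = { false };
        if (reaches(g, p, q, seen)) {   /* p ~> q -> r -> p is a cycle */
            g->edge[p][r] = REQUEST;    /* roll back: p must wait */
            return false;
        }
    }
    return true;
}

/* [Release] The assignment edge reverts to a claim edge */
void rag_release(Rag* g, int p, int r) {
    if (g->edge[p][r] == ASSIGNED) g->edge[p][r] = CLAIM;
}
```

Starting from the state in the example (`{{ASSIGNED, CLAIM}, {REQUEST, CLAIM}}`), `try_grant(&g, 1, 1)` refuses to give \(R_2\) to \(P_2\), whereas `try_grant(&g, 0, 1)` happily gives it to \(P_1\), since \(P_1\) is not waiting on anything \(P_2\) holds.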

This is a useful abstraction of what we want to programmatically implement at the application level in order to avoid deadlock, but it is a special case of a more general approach that relies on simple bookkeeping tables rather than the graph ADT.

We will not examine this approach, but interested parties may read about Banker's Algorithm.

Banker's Algorithm is a generalization of the RAG algorithm in that it allows for multiple instances of a given resource type to be requested and allocated during its execution.

To do so, rather than a graph, it employs several matrices tracking the Available resources, the Max resources of each type required by each cooperative process, the Allocated resources to each process, and the Need / Requests of each process.

There are two accompanying algorithms, the safety and resource-request algorithms, to determine if a resource request should be granted or if a process will need to wait.
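For the curious, the safety half can be sketched in a few lines. This is a simplified illustration, not the textbook's exact presentation: the sizes `NP` and `NR`, the function name `is_safe`, and the `order` output parameter are assumptions, and `need` is taken to be each process's Max minus what it has already been Allocated.

```c
#include <stdbool.h>

#define NP 5   /* number of processes (assumed for this sketch) */
#define NR 3   /* number of resource types (assumed for this sketch) */

/* Safety check: look for an order in which every process can finish,
   assuming each one releases everything it holds once it is done.
   On success, order[] holds one such safe sequence. */
bool is_safe(const int available[NR],
             int allocated[NP][NR],
             int need[NP][NR],
             int order[NP]) {
    int work[NR];
    bool finished[NP] = { false };
    for (int r = 0; r < NR; r++) work[r] = available[r];

    for (int count = 0; count < NP; ) {
        bool progressed = false;
        for (int p = 0; p < NP; p++) {
            if (finished[p]) continue;
            /* Can p's remaining need be met by what is free right now? */
            bool fits = true;
            for (int r = 0; r < NR; r++)
                if (need[p][r] > work[r]) { fits = false; break; }
            if (!fits) continue;
            /* Pretend p runs to completion and returns its resources */
            for (int r = 0; r < NR; r++) work[r] += allocated[p][r];
            finished[p] = true;
            order[count++] = p;
            progressed = true;
        }
        if (!progressed) return false;  /* nobody can finish: unsafe */
    }
    return true;
}
```

The resource-request algorithm would then tentatively grant a request, run a check like this one, and roll the grant back if the resulting state is not safe.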

See your textbook for more details!

And that, folks, is all we have to say about processes in this class! Now, onwards to memory!